
People generally don’t confuse the sounds of singing and talking. That may seem obvious. But it’s actually quite impressive—particularly when you consider that we are usually confident that we can discern between the two even when we encounter a language or musical genre that we’ve never heard before. How exactly does the human brain so effortlessly and instantaneously make such judgments?

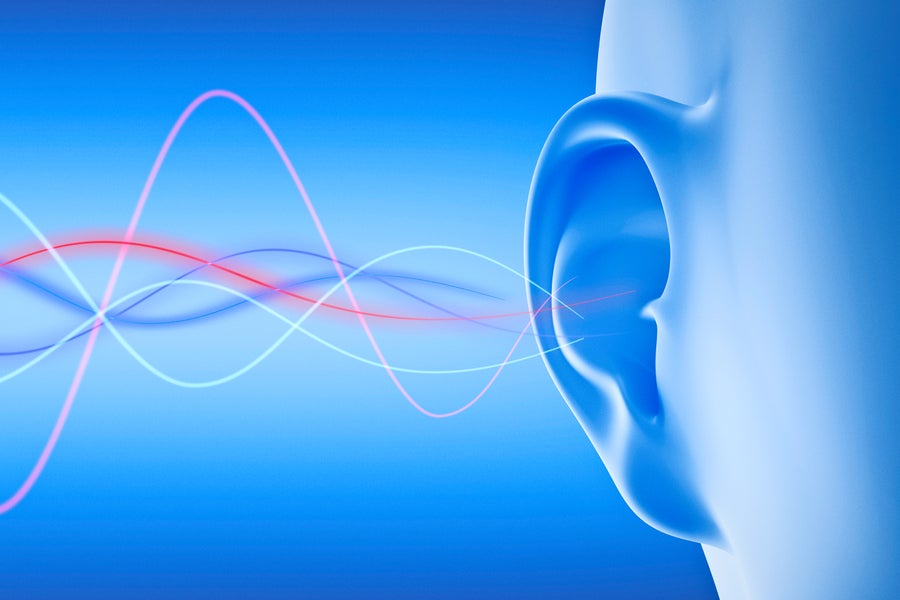

Scientists have a relatively rich understanding of how the sounds of speech are transformed into sentences, and how musical sounds move us emotionally. When sound hits our ear, for example, what’s actually happening is that sound waves are activating the auditory nerve within a part of the inner ear called the cochlea. That, in turn, transmits signals to the brain. These signals travel the so-called auditory pathway, first reaching the subregion that processes all kinds of sounds and then moving on to dedicated music or language subregions. Depending on where the signal ends up, a person comprehends the sound as meaningful information and can distinguish an aria from a spoken sentence.

That’s the broad-strokes story of auditory processing. But it remains surprisingly unclear how exactly our perceptual system differentiates these sounds within the auditory pathway. Certainly, there are clues: music and speech waveforms have distinct pitches (tones sounding high or low), timbres (qualities of sound), phonemes (speech sound units), and melodies. But the brain’s auditory pathway does not process all of those elements at once. Consider the analogy of sending a letter in the mail from, say, New York City to London or Taipei. Although the letter’s contents provide a detailed explanation of its purpose, the envelope must include some basic information to indicate its destination. Similarly, even though speech and music are packed with rich information, our brain needs some basic cues to rapidly determine which regions to engage.

The question for neuroscientists is therefore how the brain decides whether to send incoming sound to the language or music regions for detailed processing. My colleagues at New York University, the Chinese University of Hong Kong, and the National Autonomous University of Mexico and I decided to investigate this mystery. In a study published this spring, we present evidence that a simple property of sound called amplitude modulation—which describes how rapidly the volume, or “amplitude,” of a series of sounds changes over time—is a key clue in the brain’s rapid acoustic judgments. And our findings hint at the distinct evolutionary roles that music and speech have had for the human species.

Past research had shown that the amplitude modulation rate of speech is highly consistent across languages, at about 4 to 5 hertz (Hz), meaning the volume rises and falls four to five times per second. The amplitude modulation rate of music is similarly consistent across genres, at about 1 to 2 Hz. Put another way: when we talk, the volume of our voice changes much more rapidly in a given span of time than it does when we sing.
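To make the "rises and falls per second" idea concrete, here is a minimal sketch that builds an idealized volume envelope at a chosen modulation rate and counts its peaks per second. It assumes a smooth sinusoidal envelope sampled 1,000 times per second; real speech and music envelopes are far messier, and the function names `envelope` and `peaks_per_second` are hypothetical, not taken from the study.

```python
import math

def envelope(rate_hz, duration_s=2.0, sample_rate=1000):
    """Idealized volume envelope (assumed sinusoidal) that rises and falls
    rate_hz times per second, scaled to run between 0 (silent) and 1 (loudest)."""
    n = int(duration_s * sample_rate)
    return [0.5 * (1.0 - math.cos(2.0 * math.pi * rate_hz * i / sample_rate))
            for i in range(n)]

def peaks_per_second(env, sample_rate=1000):
    """Count local maxima (moments of peak loudness) and divide by duration."""
    peaks = sum(1 for i in range(1, len(env) - 1)
                if env[i - 1] < env[i] >= env[i + 1])
    return peaks / (len(env) / sample_rate)

# A speech-like envelope versus a music-like one
print(peaks_per_second(envelope(4.0)))  # speech-like rate
print(peaks_per_second(envelope(1.5)))  # music-like rate
```

Over the same two seconds, the speech-like envelope reaches peak loudness several times more often than the music-like one, which is the contrast the paragraph describes.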

Given the cross-cultural consistency of this pattern in past research, we wondered whether it might reflect a universal biological signature that plays a critical role in how the brain distinguishes speech from music. To investigate amplitude modulation, we created special white noise audio clips in which we adjusted how rapidly or slowly the volume changed over time. We also adjusted how regularly such changes occurred, that is, whether the audio had a reliable rhythm or not. We used these white noise clips rather than realistic audio recordings to isolate the effects of amplitude modulation from other aspects of sound, such as pitch or timbre, that might sway a listener’s interpretation.
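One way such stimuli could be built is sketched below. This is a hypothetical illustration, not the procedure used in the study: it assumes Gaussian white noise, a raised-cosine rise and fall within each modulation cycle, a 16 kHz sample rate, and a `jitter` parameter (our own invention) that randomly stretches or shrinks each cycle to make the rhythm irregular.

```python
import math
import random

def am_noise(rate_hz, duration_s=1.0, sample_rate=16000, jitter=0.0, seed=0):
    """Hypothetical sketch: white noise whose volume rises and falls about
    rate_hz times per second.

    jitter=0.0 gives a perfectly regular rhythm; jitter=0.5 lets each cycle's
    length vary randomly by up to +/-50% of the nominal period (irregular rhythm).
    Returns (samples, cycle_lengths_in_samples).
    """
    rng = random.Random(seed)  # seeded so the same clip can be regenerated
    n_total = int(duration_s * sample_rate)
    samples = []
    cycle_lengths = []
    while len(samples) < n_total:
        # Length of this rise-and-fall cycle, optionally jittered
        period_s = (1.0 / rate_hz) * (1.0 + rng.uniform(-jitter, jitter))
        n_cycle = max(1, round(period_s * sample_rate))
        cycle_lengths.append(n_cycle)
        for i in range(n_cycle):
            # Raised-cosine gain: silent -> loudest -> silent over one cycle
            gain = 0.5 * (1.0 - math.cos(2.0 * math.pi * i / n_cycle))
            samples.append(gain * rng.gauss(0.0, 1.0))  # scale a white-noise sample
    return samples[:n_total], cycle_lengths

# Speech-like (fast, regular) and music-like (slow, irregular) clips
speech_like, speech_cycles = am_noise(4.5)
music_like, music_cycles = am_noise(1.5, jitter=0.3)
```

Because the carrier is plain noise, the only thing that differs between clips is how fast and how regularly the volume changes, which mirrors the motivation for avoiding realistic recordings.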

Peterschreiber.media/Getty Images