
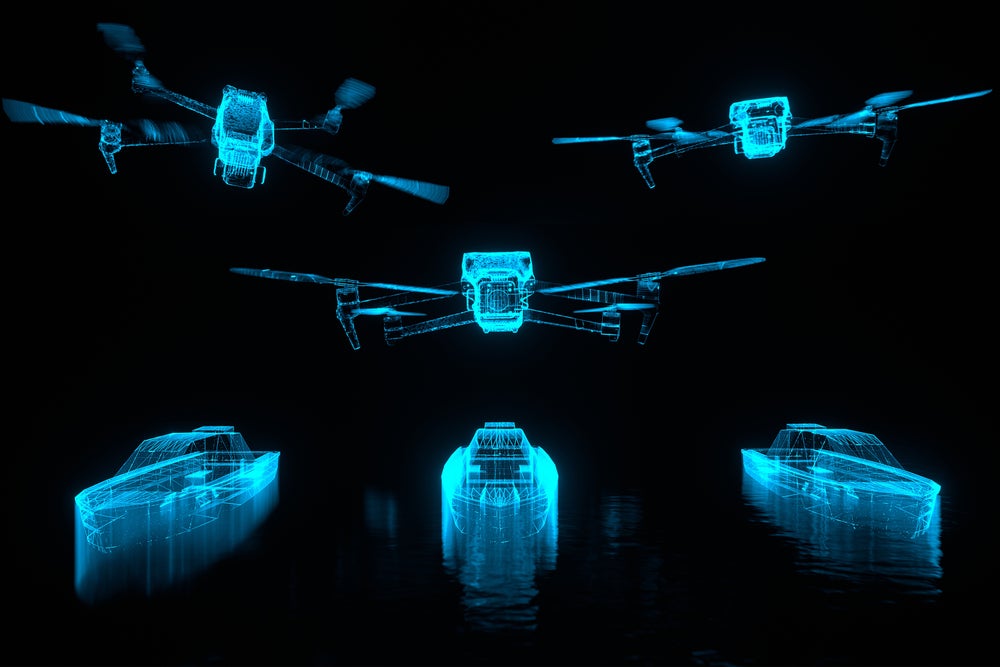

Imagine a weapon with no human deciding when to launch or pull its trigger. Imagine a weapon programmed by humans to recognize human targets, but then left to scan its internal data bank to decide whether a set of physical characteristics meant a person was friend or foe. When humans make mistakes and fire weapons at the wrong targets, the outcry can be deafening and the punishment severe. But how would we react, and whom would we hold responsible, if a computer programmed to control weapons made the fateful decision to fire and got it wrong?

This isn’t a movie; these were the kinds of questions delegates considered at the April Conference on Autonomous Weapons Systems in Vienna. In the heart of this classically European city, famous for its waltzes, while people picked up their kids from school and sat over coffee, experts on special high-level panels, microphones in hand, discussed the very real possibility that the development and use of machines programmed to decide independently who lives and who dies might soon pass the point of no return.

“This is the ‘Oppenheimer moment’ of our generation,” said Alexander Schallenberg, the Austrian federal minister for European and international affairs. “We cannot let this moment pass without taking action. Now is the time to agree on international rules and norms to assure human control.”

I reported on these meetings, extraordinary discussions about our future unfolding alongside the ordinary moments of our present, as the United Nations reporter for a Japanese media organization. Japan, the only nation in history to have experienced nuclear bombings, has a particular stake in these questions. And Schallenberg’s statement rang true: international rules to stop these machines from being given free rein to make life-and-death decisions in warfare are urgently needed. Yet very little actual rulemaking is happening.

The decades following the first “Oppenheimer moment” brought with them a simmering Cold War and a world on the edge of a catastrophic nuclear apocalypse. Even today there are serious threats to use nuclear weapons, despite their capacity to collapse civilization and cause human extinction. Do we really want to add autonomous weapons to the mix? Do we really want to arm computer systems that could mistake the body heat of a child for that of a soldier? Do we want to live in a world inhabited by machines that could choose to mass-bomb a busy town square in minutes? The time to legislate is not next week, next year, or next decade. It is now.

Andrey Kulagin/Getty Images